New Year, new Atomic Scope release! This release brings features that strengthen the core areas of the product and improve tracking performance and reliability for our users.

Over the past few months, we have seen inconsistencies in tracking: some activities went missing or remained ‘In Progress’. As a Business Process Monitoring tool, Atomic Scope must not miss any activities or messages from customers, so we investigated in depth what was causing this problem.

The Problem with Tracking in Event Hub

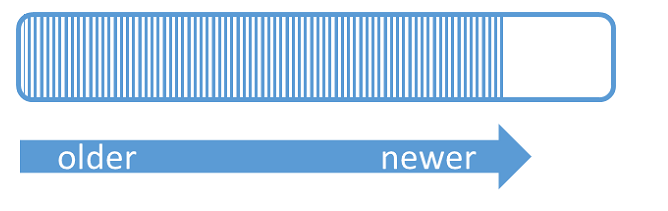

After extensive investigation, we found that Event Hubs was causing this problem. Through our APIs, customers send events such as Start/Update transaction events, Archive messages, and Exceptions. We need to preserve the order of these events even under heavy load, but Event Hubs only guarantees ordering within a single partition, not across partitions. As a result, transactions were sometimes not shown correctly in the Atomic Scope portal.
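To make the ordering problem concrete, here is a simplified, hypothetical Python model; it is not Atomic Scope or Event Hubs code. It assumes events are spread across partitions and that consumers drain those partitions at different speeds. Under those assumptions, an Update can reach the tracker before its Start, leaving it orphaned.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass(frozen=True)
class TrackingEvent:
    transaction_id: str
    kind: str  # e.g. "Start", "Update", "Archive", "Exception"
    seq: int   # order in which the connector emitted the event


@dataclass
class Tracker:
    """Hypothetical tracking store: later events only make sense after their Start."""
    started: Set[str] = field(default_factory=set)
    orphaned: List[TrackingEvent] = field(default_factory=list)

    def apply(self, event: TrackingEvent) -> None:
        if event.kind == "Start":
            self.started.add(event.transaction_id)
        elif event.transaction_id not in self.started:
            # Arrived before its Start, so it cannot be attached to a transaction.
            self.orphaned.append(event)


def track(events: List[TrackingEvent]) -> Tracker:
    """Feed events to a fresh tracker in the order they are delivered."""
    tracker = Tracker()
    for event in events:
        tracker.apply(event)
    return tracker


def deliver_across_partitions(events: List[TrackingEvent], partitions: int = 2) -> List[TrackingEvent]:
    """Spread events round-robin over partitions, then drain the last partition first
    to mimic consumers running at different speeds. Order holds only per partition."""
    buckets: List[List[TrackingEvent]] = [[] for _ in range(partitions)]
    for i, event in enumerate(events):
        buckets[i % partitions].append(event)
    return [event for bucket in reversed(buckets) for event in bucket]
```

For a transaction emitting Start, Update, Archive, Update, in-order delivery leaves nothing orphaned, while the partitioned delivery above orphans both Update events.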

Some Research & Development

The team started looking into alternatives that could work for our scenario. We looked into:

- Event Hub Partitions

- Durable Functions

- Service Bus Sessions

Event Hub Partitions

We were already aware of a concept called Event Hub partitions, which might help.

To support our use case, we needed to add Event Hub partitions dynamically as the load increased while still processing events in order. Each partition needs a unique identifier, but there is no way to inform the on-premises Atomic Scope event processing service which IDs are being created dynamically. So we concluded that Event Hub partitions would not work for us.
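The Python sketch below illustrates why a dynamically growing partition set was a problem. It routes a key to a partition with a stable CRC-32 hash purely for illustration (Event Hubs applies its own internal hashing), and `OnPremisesConsumer` is a hypothetical stand-in for a service configured with a fixed list of partition IDs.

```python
import zlib
from typing import Iterable, List


def partition_for(key: str, partition_count: int) -> int:
    """Illustrative key-to-partition routing with a stable hash.
    NOTE: not Event Hubs' real algorithm; it only models 'same key, same partition'."""
    return zlib.crc32(key.encode("utf-8")) % partition_count


class OnPremisesConsumer:
    """Hypothetical consumer that only reads the partition IDs it was configured with."""

    def __init__(self, partition_ids: Iterable[int]):
        self.partition_ids = set(partition_ids)

    def reads(self, partition_id: int) -> bool:
        return partition_id in self.partition_ids


def keys_that_move(keys: Iterable[str], old_count: int, new_count: int) -> List[str]:
    """Keys whose partition changes when partitions are added at runtime,
    so their earlier and later events land on different partitions."""
    return [k for k in keys if partition_for(k, old_count) != partition_for(k, new_count)]
```

Any event routed to a newly added partition (here, ID 4) is invisible to a consumer configured with IDs 0 to 3, and keys that move between partitions lose their per-partition ordering guarantee.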

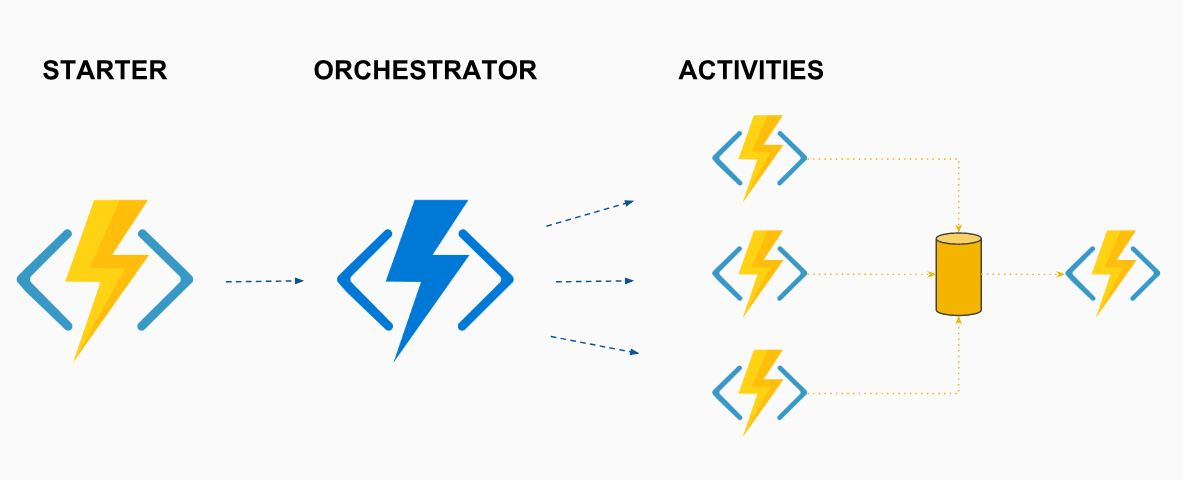

Durable Functions

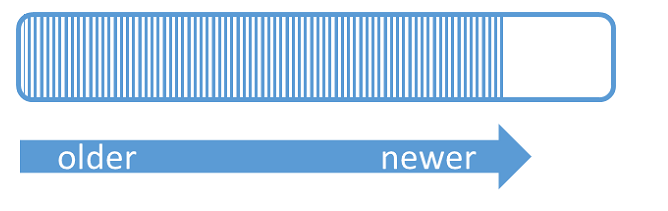

Atomic Scope’s connector uses APIs hosted on Azure Functions to process the 4 actions our connector offers: Start Transaction, Update Transaction, Log Exception, and Archive Message. Durable Functions are a popular choice for orchestrating events on Azure Functions.

To learn whether we could use Durable Functions, we started by watching Jeff Hollan’s session and immediately built some POCs to see if they would work for us. However, while we have 4 separate HTTP endpoints listening to the connectors, Durable Functions gave us only 1 exposed endpoint.

We had to combine all events manually and then send them to the service. It appeared to work, but we did not know how many events to wait for, nor whether a customer uses all 4 actions, 3, or fewer. That is why we dropped this approach as well and kept looking.
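A plain-Python model of the fan-in step shows where this broke down. `FanInAggregator` and the action names are hypothetical and are not the Durable Functions API; the model simply assumes the orchestrator must be told up front how many events to wait for.

```python
from typing import List

# Hypothetical identifiers for the connector's 4 actions.
ACTIONS = ("StartTransaction", "UpdateTransaction", "LogException", "ArchiveMessage")


class FanInAggregator:
    """Hypothetical fan-in: collects events for one transaction and completes
    only after the expected number of events has arrived."""

    def __init__(self, expected_events: int):
        self.expected_events = expected_events
        self.received: List[str] = []

    def receive(self, action: str) -> bool:
        if action not in ACTIONS:
            raise ValueError(f"unknown action: {action}")
        self.received.append(action)
        return self.is_complete()

    def is_complete(self) -> bool:
        return len(self.received) >= self.expected_events
```

If the aggregator expects all 4 actions but a customer’s workflow only uses 3, it never completes; if it expects fewer than are actually sent, it completes too early.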

Good Hope?

We started reading about Microsoft’s cloud design patterns. On further research, the team found a very helpful thread on Stack Overflow. The original question is more than 2 years old, and although the thread had only 1 answer with 0 votes, we decided to give it a try and started reading about Service Bus sessions.

We started the POC after reading Jeff Hollan’s excellent article on this topic.
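To illustrate the property that made sessions attractive, here is a minimal in-memory model of session semantics in Python. It is not the Azure Service Bus SDK: `SessionQueue` and its methods are hypothetical, and using the transaction ID as the session ID is an illustrative assumption. Messages that share a session ID are delivered in FIFO order to one receiver at a time, while different sessions can be processed in parallel.

```python
from collections import deque
from typing import Deque, Dict, Optional, Set


class SessionQueue:
    """In-memory model of session-aware queue semantics (not the Azure SDK)."""

    def __init__(self) -> None:
        self._sessions: Dict[str, Deque[str]] = {}  # session ID -> FIFO of messages
        self._locked: Set[str] = set()              # sessions currently held by a receiver

    def send(self, session_id: str, body: str) -> None:
        self._sessions.setdefault(session_id, deque()).append(body)

    def accept_next_session(self) -> Optional[str]:
        """Lock the first session that has pending messages and is not already held."""
        for session_id, messages in self._sessions.items():
            if messages and session_id not in self._locked:
                self._locked.add(session_id)
                return session_id
        return None

    def receive(self, session_id: str) -> Optional[str]:
        """Return the oldest message of a locked session, or None when it is drained."""
        if session_id not in self._locked:
            raise RuntimeError(f"session {session_id!r} is not locked by a receiver")
        messages = self._sessions[session_id]
        return messages.popleft() if messages else None

    def release(self, session_id: str) -> None:
        self._locked.discard(session_id)
```

Because one receiver holds a session’s lock, a transaction’s Start, Update, and Archive events are processed in the order they were sent, while another receiver works on a different transaction at the same time.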

Service Bus Sessions to the rescue!!!

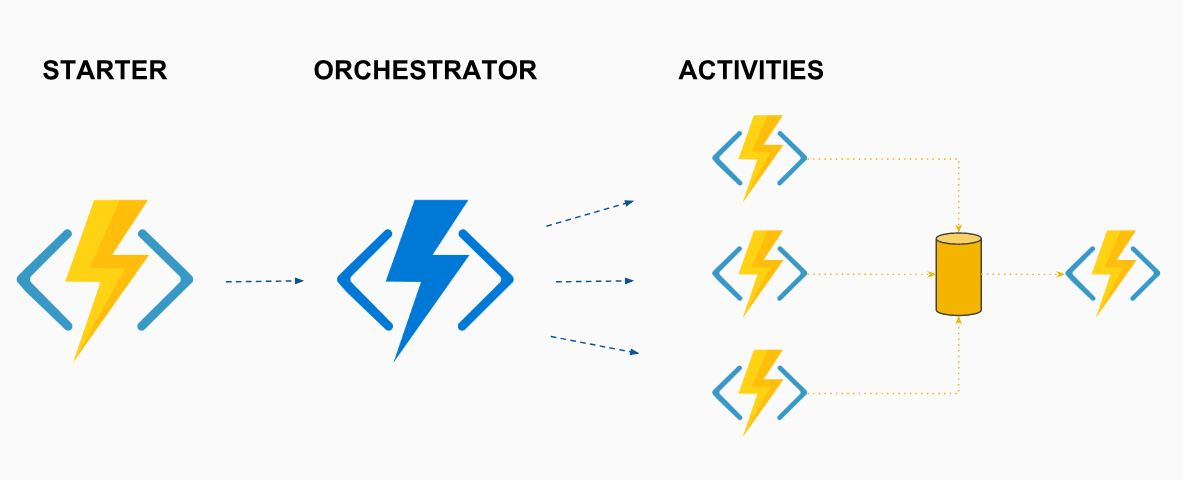

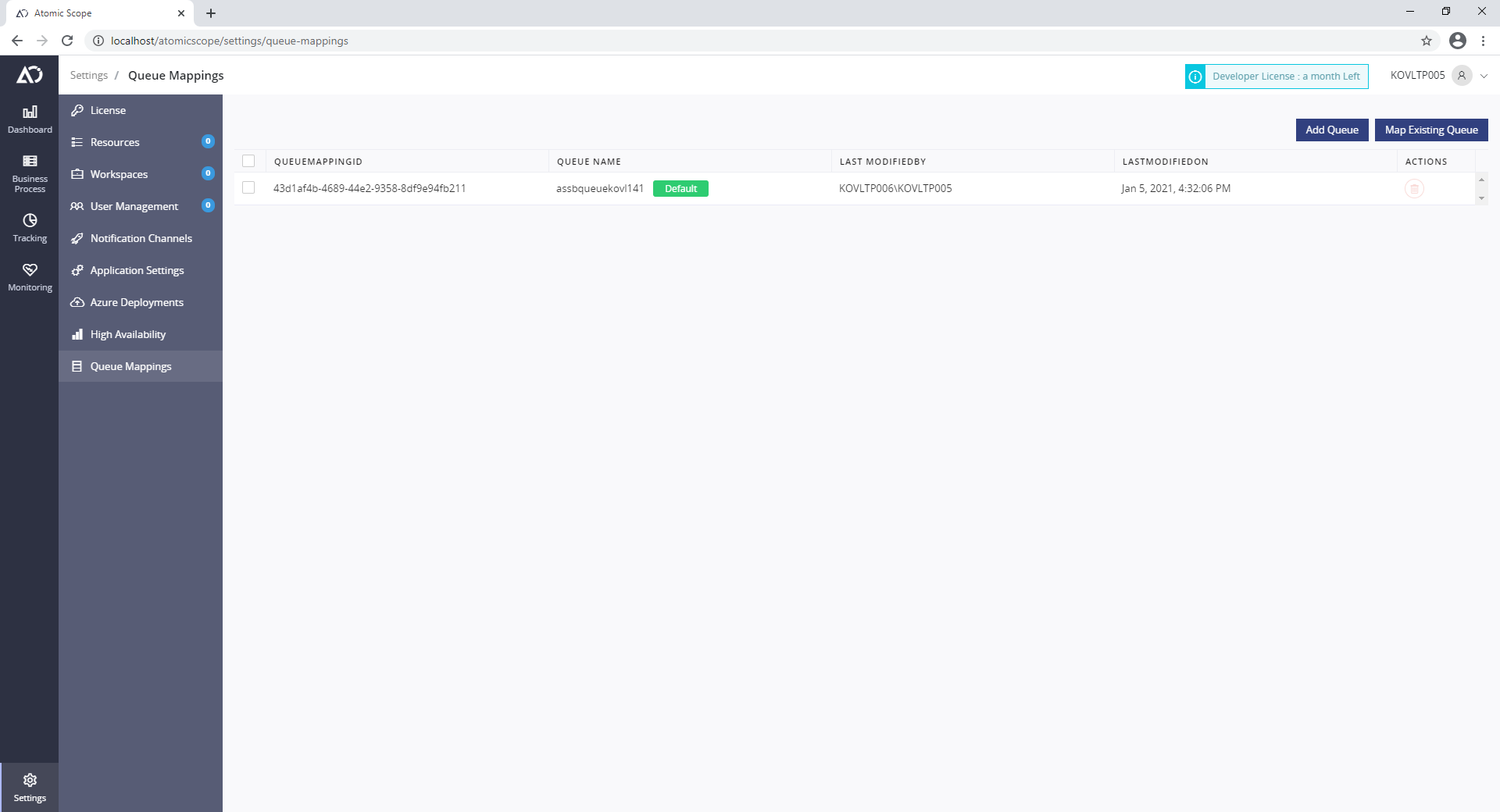

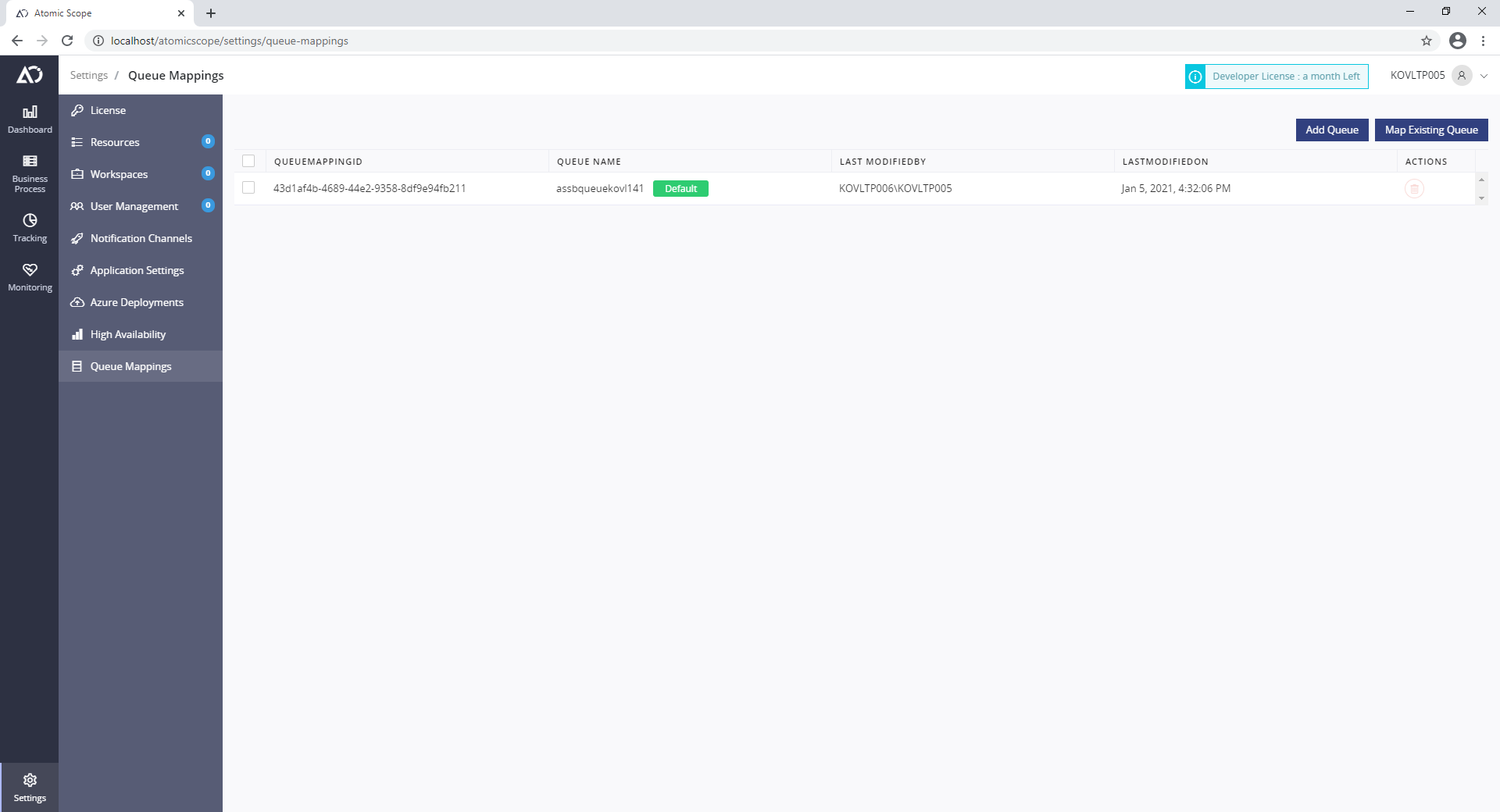

To handle a high volume of messages, Atomic Scope v7.5 adds a new page called Queue Mappings under the Atomic Scope settings section. Here you can add up to 10 queues to handle the load. When you install or upgrade Atomic Scope and the Azure architecture is deployed, it starts with one queue by default.

You can either choose to add new queues or map existing queues.
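As a rough illustration of the rules above, here is a hypothetical Python model of the queue mapping table; it is not Atomic Scope’s code. It assumes the default queue counts toward the 10-queue limit and that each mapping gets a GUID-style QueueMappingId, neither of which is stated explicitly.

```python
import uuid
from dataclasses import dataclass
from typing import Dict

MAX_QUEUES = 10  # limit mentioned for v7.5; ASSUMPTION: includes the default queue


@dataclass(frozen=True)
class QueueMapping:
    queue_mapping_id: str
    queue_name: str
    is_default: bool = False


class QueueMappingTable:
    """Hypothetical model of the Queue Mappings page."""

    def __init__(self, default_queue_name: str):
        self._mappings: Dict[str, QueueMapping] = {}
        self.default = self._register(default_queue_name, is_default=True)

    def _register(self, queue_name: str, is_default: bool = False) -> QueueMapping:
        if len(self._mappings) >= MAX_QUEUES:
            raise ValueError(f"at most {MAX_QUEUES} queues can be mapped")
        # ASSUMPTION: IDs are GUIDs; the real format may differ.
        mapping = QueueMapping(str(uuid.uuid4()), queue_name, is_default)
        self._mappings[mapping.queue_mapping_id] = mapping
        return mapping

    def add_new_queue(self, queue_name: str) -> QueueMapping:
        # A real implementation would presumably also create the queue in Azure;
        # this model only records the mapping.
        return self._register(queue_name)

    def map_existing_queue(self, queue_name: str) -> QueueMapping:
        # The queue already exists, so only the mapping is recorded.
        return self._register(queue_name)

    def remaining_capacity(self) -> int:
        return MAX_QUEUES - len(self._mappings)

    def __len__(self) -> int:
        return len(self._mappings)
```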

Now, to handle high load across transactions, you need to tell Atomic Scope which queue to use for such high-volume transactions.

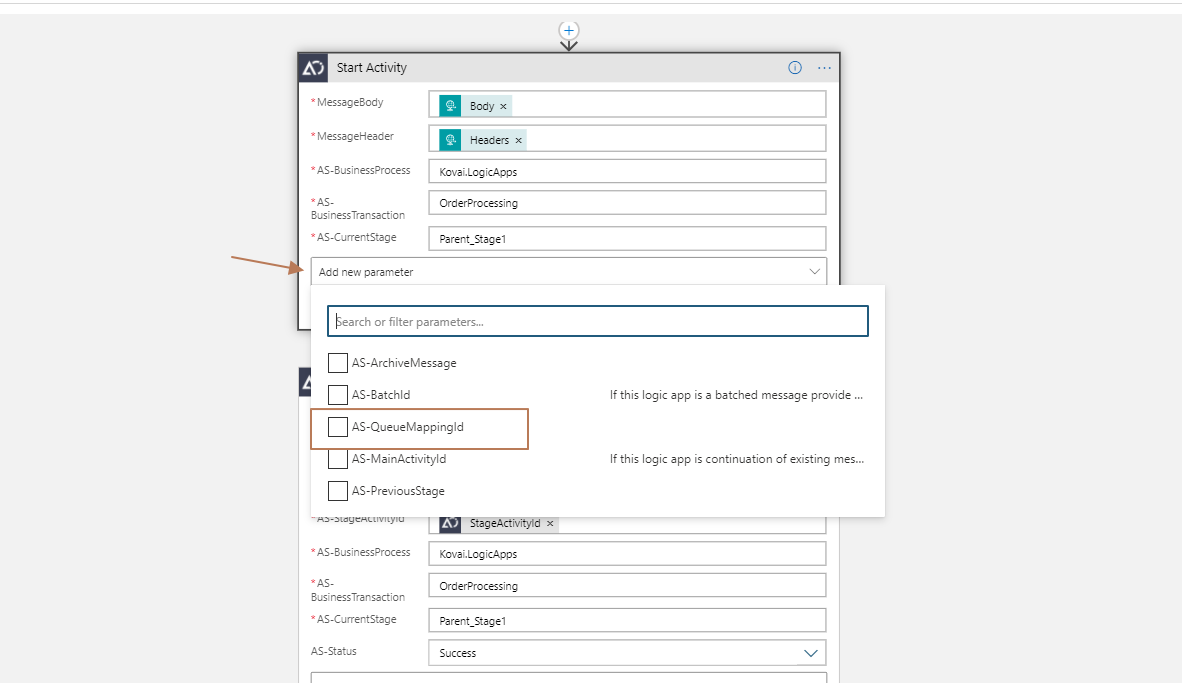

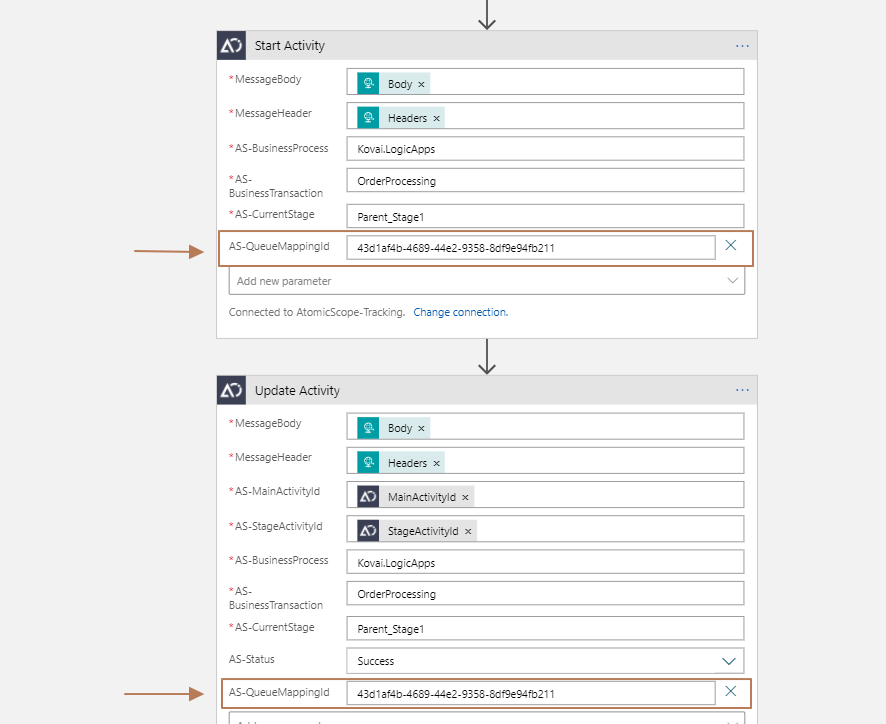

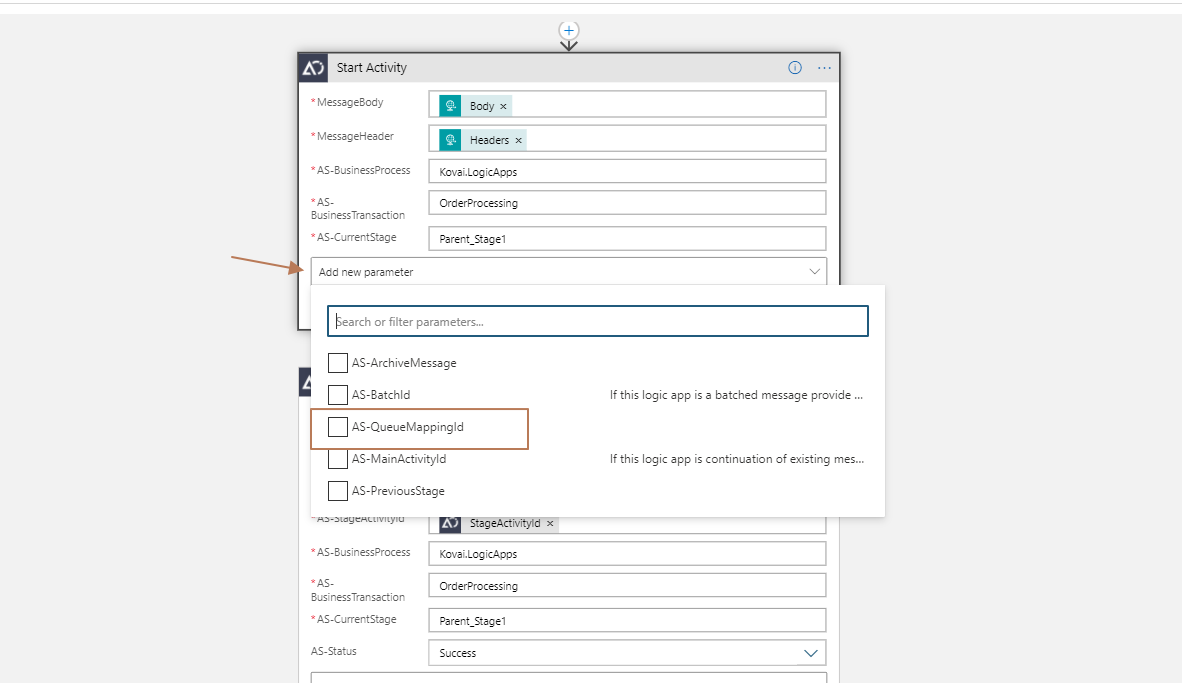

Tracking Configuration in Logic App

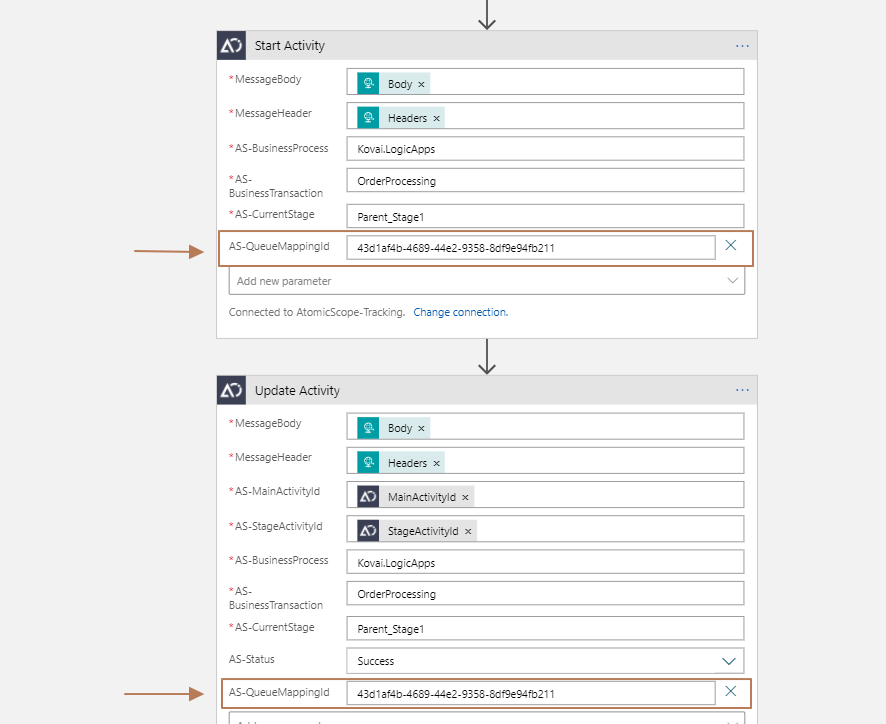

Let us assume a transaction called OrderProcessing generates a high volume, and you have added a new Service Bus queue in the Atomic Scope portal. To handle the load, copy the respective QueueMappingId from the Atomic Scope queue mapping table and pass it to the connector, as shown in the screenshots.

If a transaction such as OrderProcessing has several actions, you need to send the same QueueMappingId with every action so the load is handled seamlessly. To make sure no activities are missed, if you forget to send the ID, the activity automatically falls back to the default queue.
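The routing rule can be sketched in a few lines of Python. The function names and queue names are hypothetical; the fallback for a missing ID follows the behavior described above, while falling back for an unknown ID is an assumption.

```python
from typing import Dict, List, Optional, Tuple


def resolve_queue(queue_mapping_id: Optional[str], mappings: Dict[str, str], default_queue: str) -> str:
    """Return the queue a tracking action should be sent to.
    A missing ID falls back to the default queue so no activity is lost.
    ASSUMPTION: an unknown ID also falls back rather than failing."""
    if queue_mapping_id and queue_mapping_id in mappings:
        return mappings[queue_mapping_id]
    return default_queue


def route_actions(
    actions: List[Tuple[str, Optional[str]]],
    mappings: Dict[str, str],
    default_queue: str,
) -> List[Tuple[str, str]]:
    """Pair each (action, QueueMappingId) with the queue it will be sent to."""
    return [(action, resolve_queue(qid, mappings, default_queue)) for action, qid in actions]
```

For instance, if OrderProcessing sends its Start and Update actions with the mapped ID but the Archive action omits it, the Archive message ends up on the default queue rather than the mapped one.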

For more info, see the Queue Mapping Docs in the Documentation Portal.
